This page contains some basic OpenCV programs that I created while learning the basic concepts and core functions of the library. They are mostly written in C++, as this is the native language of OpenCV. I am using the latest OpenCV version, 3.2. Formerly I also used the C interface, but the C++ interface is cleaner and has been the main focus of development since OpenCV 2.x. I have also tried Java: there is a desktop and an Android version, and the latter is becoming more and more popular.

An older OpenCV project using Android and a Lego NXT robot that I created years ago can be found here.

Getting OpenCV to work is always hard. Most recently I followed this tutorial to set it up on Ubuntu 16.04.

I installed it to /usr/share/opencv with the following commands (it was not my first attempt):

```sh
cmake -D CMAKE_BUILD_TYPE=Release -D CMAKE_INSTALL_PREFIX=/usr/share/opencv -D BUILD_DOCS=ON /home/rics/tmp/opencv-3.2.0
make -j7
sudo make install
```

After that, /usr/share/opencv/include contains the header files and /usr/share/opencv/lib contains the libraries to be dynamically linked, in other words the .so files.

For easier use, I configured pkg-config for OpenCV as follows:

```sh
export OPENCV_HOME=/usr/share/opencv
export PKG_CONFIG_PATH=$PKG_CONFIG_PATH:$OPENCV_HOME/lib/pkgconfig
```

Then `pkg-config --cflags opencv` and `pkg-config --libs opencv` are enough to determine the location of the OpenCV include and library directories. (In NetBeans the latter automatically implies the former.)

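Finally, the following 3 libraries need to be linked: opencv_core, opencv_highgui and opencv_imgproc. Since OpenCV 3.0, imread lives in opencv_imgcodecs and VideoCapture in opencv_videoio, so these two are needed as well for the image and video examples when the libraries are listed one by one.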

To use OpenCV from Java, wrapper functions are generated that call the C++ interface.

This Java layer above C++ is defined in /usr/share/opencv/share/OpenCV/java, a directory that is created during OpenCV compilation.

This directory contains opencv-320.jar, which has to be referenced from the Java project when the Java classes are compiled. In other words, opencv-320.jar has to be on the classpath.

Moreover, this directory should also be on the Java library path, because it contains the libopencv_java320.so dynamic library that links the Java and C++ code together.

The library path can be set like this in Linux before the first execution:

```sh
export LD_LIBRARY_PATH=/usr/share/opencv/share/OpenCV/java
```

or like this during program start:

```sh
java -Djava.library.path="/usr/share/opencv/share/OpenCV/java" -jar JavaOpenCVTest.jar
```

The simplest OpenCV program opens chessboard.jpg from the images directory in a window, using the imread and imshow functions, and waits for a keypress.

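It is important to note that cv::Mat manages its memory by reference counting: the image data is released automatically when the last Mat referring to it goes out of scope, so deallocating the img object explicitly is not necessary.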

The next program opens the default web camera and shows its image in a window.

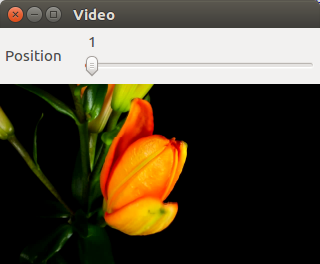

In the following program a video is displayed in a window with a trackbar at the top. The current position of the trackbar is stored in g_slider_position and is continuously updated with setTrackbarPos. If the user moves the trackbar, the video position is updated accordingly by the onTrackbarSlide callback function.

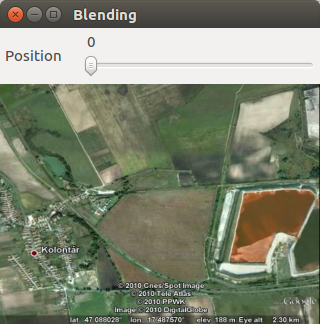

There are really powerful image manipulation methods in OpenCV. A simple yet spectacular one is addWeighted, which mixes two image matrices together. The weight of each component is set by the user with a trackbar.

The example is taken from here.
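To make the arithmetic concrete, here is a dependency-free C++ sketch of the per-pixel rule that addWeighted applies to 8-bit images: dst = saturate(src1 * alpha + src2 * beta + gamma). In OpenCV itself this is a single call, cv::addWeighted(src1, alpha, src2, beta, gamma, dst). The flat std::vector image layout, the helper names blendWeighted and blendFromSlider, and the mapping of a trackbar position to alpha (with beta = 1 - alpha) are assumptions for illustration, not the code of the example referenced above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Per-pixel blend in the spirit of cv::addWeighted for 8-bit data:
// dst = saturate(src1 * alpha + src2 * beta + gamma)
std::vector<std::uint8_t> blendWeighted(const std::vector<std::uint8_t>& src1, double alpha,
                                        const std::vector<std::uint8_t>& src2, double beta,
                                        double gamma) {
    if (src1.size() != src2.size())
        throw std::invalid_argument("both images must have the same size");
    std::vector<std::uint8_t> dst(src1.size());
    for (std::size_t i = 0; i < src1.size(); ++i) {
        double v = src1[i] * alpha + src2[i] * beta + gamma;
        // Round to nearest and clamp to [0, 255], similar to saturate_cast<uchar>
        // (std::lround rounds .5 away from zero; OpenCV's tie rounding may differ).
        long r = std::lround(v);
        dst[i] = static_cast<std::uint8_t>(std::max(0L, std::min(255L, r)));
    }
    return dst;
}

// Hypothetical trackbar logic: slider position pos in [0, maxPos] becomes the
// weight of the first image; the second image gets the complementary weight.
std::vector<std::uint8_t> blendFromSlider(const std::vector<std::uint8_t>& a,
                                          const std::vector<std::uint8_t>& b,
                                          int pos, int maxPos) {
    assert(maxPos > 0 && pos >= 0 && pos <= maxPos);
    double alpha = static_cast<double>(pos) / maxPos;
    return blendWeighted(a, alpha, b, 1.0 - alpha, 0.0);
}
```

With the slider at its maximum the first image comes back unchanged, at zero the second one; the clamp to [0, 255] only matters when the weights sum to more than 1 or gamma is non-zero.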

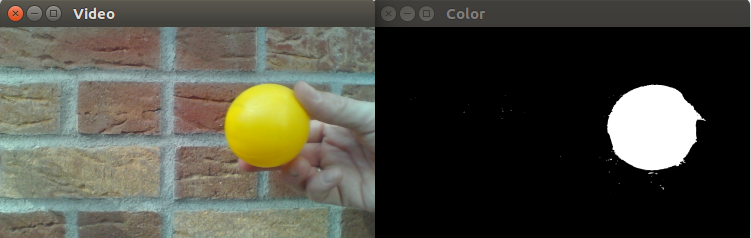

Another image processing method is thresholding with the inRange function. In this case pixels falling into a selected range are marked white, and all other pixels stay black in the resulting image. This is performed by the findColor function after converting the frame from RGB to HSV color space.

HSV (hue-saturation-value) color space is better suited to select a certain perceived color than RGB.

In the following example the selected color is yellow with hue interval (30,70) on the (0,360) scale.

The program shows both the original and the thresholded frames of the video capture.
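The same thresholding idea can be sketched without OpenCV: compute the hue of each pixel in degrees and mark pixels inside an inclusive range with 255, as inRange does with per-channel lower and upper bounds. One detail matters when porting the (30,70) interval: for 8-bit images cvtColor stores hue as degrees divided by 2 (0 to 179), so the interval becomes (15,35) in an OpenCV HSV matrix, while saturation and value use the full 0 to 255 range. Pixels are given as RGB triples here; OpenCV frames are stored in BGR order, so COLOR_BGR2HSV is the matching conversion code. The names below and the minimum saturation and value bounds (sMin, vMin) are hypothetical, added to keep gray and dark pixels out of the mask.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Rgb { std::uint8_t r, g, b; };

// Hue in degrees [0, 360) using the standard RGB -> HSV formula.
// Gray pixels (max == min) have no defined hue; 0 is returned for them.
double hueDegrees(Rgb p) {
    double r = p.r / 255.0, g = p.g / 255.0, b = p.b / 255.0;
    double mx = std::max({r, g, b}), mn = std::min({r, g, b});
    double d = mx - mn;
    if (d == 0.0) return 0.0;
    double h;
    if (mx == r)      h = 60.0 * (g - b) / d;
    else if (mx == g) h = 60.0 * (b - r) / d + 120.0;
    else              h = 60.0 * (r - g) / d + 240.0;
    return h < 0.0 ? h + 360.0 : h;
}

// inRange-style mask: 255 where hLo <= hue <= hHi (inclusive, like cv::inRange)
// and saturation/value reach the hypothetical minimums sMin/vMin, 0 elsewhere.
// Hue ranges that wrap around 0 degrees (reds) are not handled.
std::vector<std::uint8_t> colorMask(const std::vector<Rgb>& img, double hLo, double hHi,
                                    double sMin, double vMin) {
    assert(hLo <= hHi);
    std::vector<std::uint8_t> mask(img.size(), 0);
    for (std::size_t i = 0; i < img.size(); ++i) {
        const Rgb& p = img[i];
        double v = std::max({p.r, p.g, p.b}) / 255.0;   // value = max channel
        double mn = std::min({p.r, p.g, p.b}) / 255.0;
        double s = v > 0.0 ? (v - mn) / v : 0.0;        // saturation
        double h = hueDegrees(p);
        if (h >= hLo && h <= hHi && s >= sMin && v >= vMin) mask[i] = 255;
    }
    return mask;
}

// OpenCV stores 8-bit hue as degrees / 2, so (30, 70) becomes (15, 35).
int toOpenCvHue8(double degrees) { return static_cast<int>(degrees / 2.0); }
```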

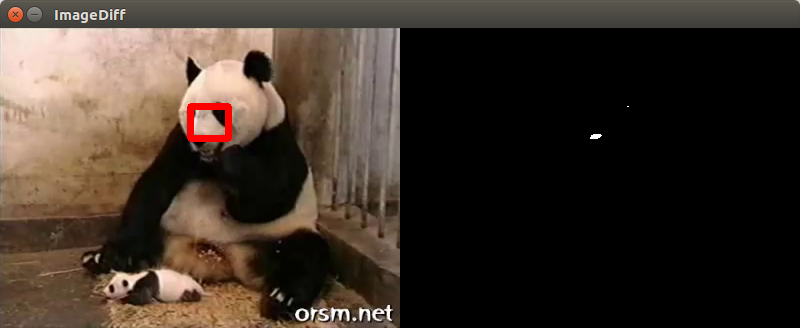

There are several ways to detect changes and track object movement with OpenCV.

A simple solution is the following image difference program. First it creates a large img matrix: its left half (left_img) shows the original video frames and its right half (right_img) shows the changing pixels in white.

Detection of the change is performed by diffImage.

This function splits the current and the previous frame into their BGR channels, blurs them with boxFilter and then calculates the absolute difference (absdiff) pixel by pixel in each channel. The result is stored in the single-channel matrix res.

Then res is further processed with the compare function: if the absolute difference is bigger than 20, the resulting pixel will be 255, otherwise 0.
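Here is a single-channel C++ sketch of the same pipeline: blur both frames with a 3x3 box filter, take the absolute difference, and set pixels whose difference exceeds 20 to 255. With OpenCV this roughly corresponds to cv::boxFilter, cv::absdiff and cv::compare with cv::CMP_GT. The 3x3 kernel, the edge handling and the names Gray, boxBlur3 and diffMask are assumptions; how diffImage merges the three BGR channel results into res is not reproduced here, so the sketch would have to be run once per channel.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// A single-channel 8-bit image stored row by row.
struct Gray {
    int rows, cols;
    std::vector<std::uint8_t> px;
    std::uint8_t at(int r, int c) const { return px[r * cols + c]; }
};

// 3x3 normalized box filter (mean of the neighbourhood). Borders are handled by
// clamping coordinates to the image (edge replication); OpenCV's boxFilter uses
// BORDER_REFLECT_101 by default, so edge pixels may differ slightly.
std::vector<double> boxBlur3(const Gray& img) {
    std::vector<double> out(img.px.size());
    for (int r = 0; r < img.rows; ++r)
        for (int c = 0; c < img.cols; ++c) {
            double sum = 0.0;
            for (int dr = -1; dr <= 1; ++dr)
                for (int dc = -1; dc <= 1; ++dc) {
                    int rr = std::min(std::max(r + dr, 0), img.rows - 1);
                    int cc = std::min(std::max(c + dc, 0), img.cols - 1);
                    sum += img.at(rr, cc);
                }
            out[r * img.cols + c] = sum / 9.0;
        }
    return out;
}

// Blur both frames, take the absolute difference pixel by pixel and mark pixels
// whose difference exceeds the threshold with 255 (like compare with CMP_GT).
Gray diffMask(const Gray& prev, const Gray& cur, double threshold = 20.0) {
    assert(prev.rows == cur.rows && prev.cols == cur.cols && prev.px.size() == cur.px.size());
    std::vector<double> a = boxBlur3(prev), b = boxBlur3(cur);
    Gray res{cur.rows, cur.cols, std::vector<std::uint8_t>(cur.px.size(), 0)};
    for (std::size_t i = 0; i < a.size(); ++i)
        if (std::fabs(a[i] - b[i]) > threshold) res.px[i] = 255;
    return res;
}
```

The blur is what suppresses isolated noise: a single pixel that changes by 100 contributes only about 11 to its blurred neighbourhood and stays below the threshold, while a change of 255 (about 28 after blurring) marks the whole 3x3 block around it.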

Based on diffImage's result, a bounding rectangle of the movement is calculated with calculateMovementCoordinates and the rectangle is drawn onto left_img.

Finally, res_img is copied into each channel of the BGR right_img to get a black-and-white frame.
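The bounding rectangle can be sketched by scanning the mask and keeping the smallest and largest coordinates of its non-zero pixels. In OpenCV, cv::findNonZero followed by cv::boundingRect yields the same rectangle, and cv::rectangle draws it onto an image. The Box struct and the movementBox function are hypothetical and only illustrate the idea; they are not the calculateMovementCoordinates function of the program.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

struct Box { int x, y, width, height; };

// Smallest axis-aligned rectangle containing every non-zero pixel of a
// rows x cols mask stored row by row. Returns false if nothing moved.
bool movementBox(const std::vector<std::uint8_t>& mask, int rows, int cols, Box& box) {
    assert(static_cast<int>(mask.size()) == rows * cols);
    int minX = cols, minY = rows, maxX = -1, maxY = -1;
    for (int y = 0; y < rows; ++y)
        for (int x = 0; x < cols; ++x)
            if (mask[y * cols + x] != 0) {
                minX = std::min(minX, x);
                maxX = std::max(maxX, x);
                minY = std::min(minY, y);
                maxY = std::max(maxY, y);
            }
    if (maxX < 0) return false;  // no changed pixel at all
    // Width and height count pixels inclusively, as boundingRect does for points.
    box = Box{minX, minY, maxX - minX + 1, maxY - minY + 1};
    return true;
}
```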

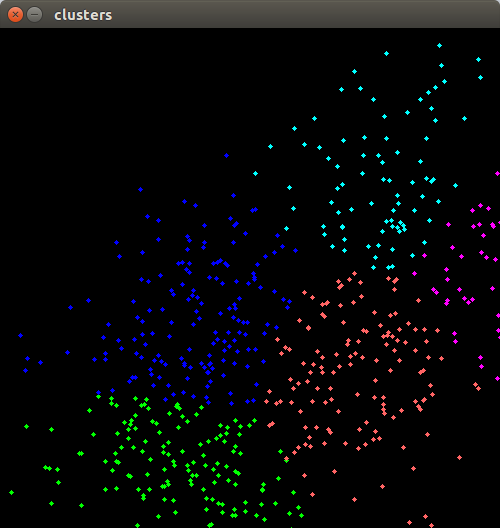

As images are represented as large matrices, OpenCV is equally useful for more general matrix manipulation. The following program groups 2D points with the k-means clustering algorithm. It is based on an example from Gary Bradski and Adrian Kaehler's book Learning OpenCV: Computer Vision with the OpenCV Library (O'Reilly Media, 2008).

After generating randomly distributed points, the kmeans function groups them into cluster_count clusters by assigning each point to the nearest cluster center. The points of each cluster are then displayed in a different color in an image.
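To show what kmeans does internally, here is a minimal sketch of Lloyd's algorithm for 2D points: assign every point to its nearest center, move each center to the mean of its points, and repeat until no label changes. Starting from the first k points is an assumption made for determinism; cv::kmeans instead picks random or k-means++ initial centers (KMEANS_RANDOM_CENTERS, KMEANS_PP_CENTERS), stops according to a TermCriteria and can repeat several attempts, keeping the most compact result. The Pt struct and kmeans2d are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

struct Pt { double x, y; };

// Plain Lloyd iteration. Returns the cluster label of every point and leaves
// the final cluster centers in `centers`.
std::vector<int> kmeans2d(const std::vector<Pt>& pts, int k, int maxIter,
                          std::vector<Pt>& centers) {
    assert(k > 0 && static_cast<std::size_t>(k) <= pts.size());
    centers.assign(pts.begin(), pts.begin() + k);  // assumed initialization
    std::vector<int> labels(pts.size(), -1);
    for (int iter = 0; iter < maxIter; ++iter) {
        // Assignment step: nearest center by squared Euclidean distance.
        bool changed = false;
        for (std::size_t i = 0; i < pts.size(); ++i) {
            int best = 0;
            double bestD = std::numeric_limits<double>::max();
            for (int c = 0; c < k; ++c) {
                double dx = pts[i].x - centers[c].x, dy = pts[i].y - centers[c].y;
                double d = dx * dx + dy * dy;
                if (d < bestD) { bestD = d; best = c; }
            }
            if (labels[i] != best) { labels[i] = best; changed = true; }
        }
        if (!changed) break;  // converged
        // Update step: move each center to the mean of its points.
        std::vector<Pt> sum(k, Pt{0.0, 0.0});
        std::vector<int> count(k, 0);
        for (std::size_t i = 0; i < pts.size(); ++i) {
            sum[labels[i]].x += pts[i].x;
            sum[labels[i]].y += pts[i].y;
            ++count[labels[i]];
        }
        for (int c = 0; c < k; ++c)
            if (count[c] > 0) centers[c] = Pt{sum[c].x / count[c], sum[c].y / count[c]};
    }
    return labels;
}
```

A poor start can still converge: with the points (0,0), (0,1), (10,10), (10,11) both initial centers lie in the first group, yet after a few iterations the two groups are separated.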

OpenCV supports languages other than C++. For Java, the wrapper functions need to be available, as described above in the Configuration section.

The Java example only sets and reads some matrix elements; the important point is loading the native interface in a static initializer:

```java
static { System.loadLibrary(Core.NATIVE_LIBRARY_NAME); }
```